Analyzing Tencent Cloud EdgeOne (EO) Logs with Elasticsearch

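The Tencent Cloud EO console shows some metrics, but for more detailed information we need to download the offline logs and analyze them ourselves.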

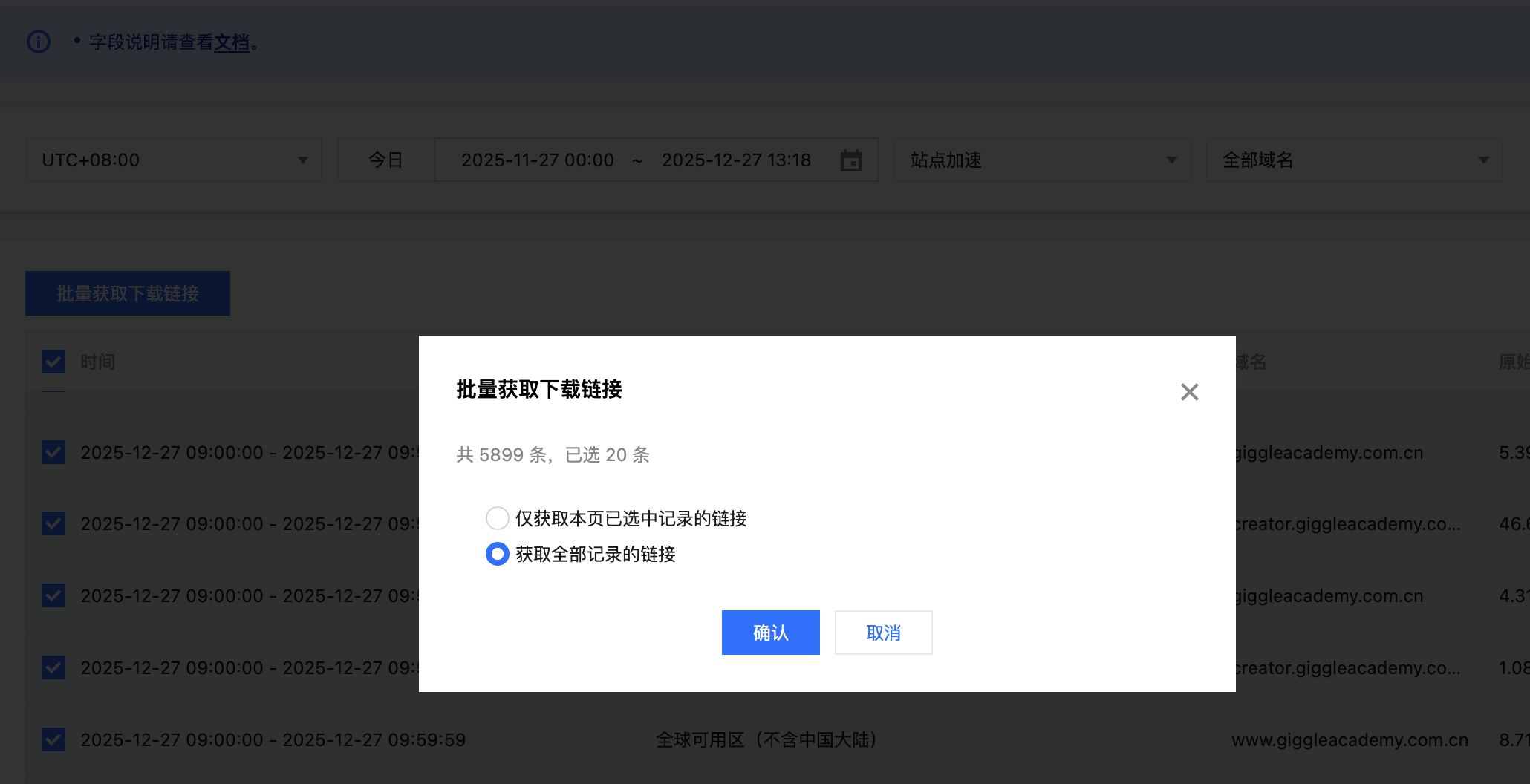

Getting the log download links

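Tencent Cloud packages the logs as .gz files. Each decompressed file contains multiple lines. Every line is one JSON object that corresponds to one EO request log entry. The log format is described in the Tencent Cloud documentation.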
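Here is a minimal sketch of the parsing step. It assumes each downloaded archive is a gzip-compressed file with one JSON object per line, as described above, and uses only Python's standard `gzip` and `json` modules. The function name `iter_eo_records` is hypothetical and does not come from the original script. It yields records one at a time so that a large archive never has to be loaded into memory in full.

```python
import gzip
import json
from typing import Iterator


def iter_eo_records(path: str) -> Iterator[dict]:
    """Yield one dict per non-empty line of a gzip-compressed JSON Lines file.

    iter_eo_records is a hypothetical helper, not part of any Tencent Cloud SDK.
    """
    # "rt" decompresses on the fly and yields text lines, so the whole
    # file never has to be held in memory.
    with gzip.open(path, "rt", encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            line = line.strip()
            if not line:
                continue  # skip blank lines such as a trailing newline
            try:
                yield json.loads(line)
            except json.JSONDecodeError as exc:
                # Skip a malformed line instead of aborting the whole file.
                print(f"{path}:{line_no}: skipped malformed line ({exc})")
```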

We can fetch the download links for the past month's logs in bulk, then copy all of them and save them to a file named urls.txt.
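Below is a small sketch for reading urls.txt back in. It assumes one link per line. Both helper names are hypothetical. `local_file_name` takes the file name from the URL path and drops the query string, because signed download links may carry extra parameters that do not belong in a file name.

```python
from pathlib import Path, PurePosixPath
from typing import List
from urllib.parse import urlparse


def load_urls(path: str = "urls.txt") -> List[str]:
    """Read download links from a text file, one per line.

    Blank lines and non-HTTP(S) lines are ignored; duplicates are dropped
    while keeping the original order.
    """
    seen = set()
    urls: List[str] = []
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        url = raw.strip()
        if not url.startswith(("http://", "https://")):
            continue
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


def local_file_name(url: str) -> str:
    """Derive a local file name from the URL path, ignoring any query string."""
    # URL paths always use "/", so PurePosixPath is correct on every OS.
    return PurePosixPath(urlparse(url).path).name
```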

Starting the Elasticsearch cluster

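Following the official documentation, we start the cluster with Docker. First download `.env` and `docker-compose.yml`. In `.env`, set both the Elasticsearch password (`ELASTIC_PASSWORD`) and the Kibana password (`KIBANA_PASSWORD`) to 123456, and set `STACK_VERSION=9.2.3`. The data volume is fairly large, so it helps to raise the containers' memory limit (`MEM_LIMIT`, in bytes). I set it to 8 GB per container.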

```
# Password for the 'elastic' user (at least 6 characters)
ELASTIC_PASSWORD=123456
```

Once this is configured, start the ES cluster with `docker-compose up -d`.

Kibana is then available at http://127.0.0.1:5601. Log in with username elastic and password 123456.

Writing the logs

The following code downloads and parses the logs and saves them to ES:

```python
import gzip
```

Running the code above downloads the logs and writes them to ES. This takes a long time; in my case it took more than 100 minutes.
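Only the first line of the original script survives above. The following is therefore a hedged sketch of how the write step could look with Elasticsearch's `_bulk` API. It is not the author's code. It assumes the local HTTPS cluster and the elastic/123456 credentials from the setup above. It also assumes that the `_source_file` and `_import_time` fields seen in the index were filled with the archive name and the import timestamp. The helper names `chunked`, `build_bulk_body` and `post_bulk` are hypothetical.

```python
import base64
import json
import ssl
import urllib.request
from datetime import datetime, timezone
from typing import Iterable, Iterator, List


def chunked(items: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group an iterable into lists of at most `size` items."""
    batch: List[dict] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def build_bulk_body(records: Iterable[dict], index: str, source_file: str) -> str:
    """Build an NDJSON body for the _bulk API: an action line before each
    document; the whole body must end with a newline."""
    import_time = datetime.now(timezone.utc).isoformat()
    lines: List[str] = []
    for record in records:
        doc = dict(record)  # copy so the caller's dict is not modified
        # Assumed equivalents of the _source_file / _import_time fields in the index
        doc["_source_file"] = source_file
        doc["_import_time"] = import_time
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


def post_bulk(body: str, es_url: str = "https://127.0.0.1:9200",
              user: str = "elastic", password: str = "123456") -> dict:
    """POST one NDJSON batch to /_bulk and return the parsed response."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    req = urllib.request.Request(
        f"{es_url}/_bulk",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )
    # Like curl -k: skip verification of the local cluster's self-signed certificate.
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(req, context=ctx) as resp:
        return json.loads(resp.read())
```

Batching keeps each request bounded even with tens of millions of lines. The `_bulk` response has a top-level `errors` flag that is `true` if any document in the batch failed, so check it after every `post_bulk` call rather than assuming success.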

Analyzing the logs

Once indexing is complete, we can check the index, for example its document count:

```shell
curl 'https://127.0.0.1:9200/eo_logs/_count' --header 'Authorization: Basic ZWxhc3RpYzo9dk5Cc0QwSTNZRWFPa2RoZFFhZg==' -k
```

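The count shows that more than 30 million documents were indexed. We can also inspect the index mapping and details, for example with `GET /eo_logs/_mapping` or `GET /eo_logs`.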
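The `Authorization` header in the `_count` request above is plain HTTP Basic auth: the word `Basic` followed by the Base64 encoding of `user:password`. Decoding the value shown gives the user `elastic` but a password other than 123456. It presumably comes from a different setup, so build the value from your own credentials. Alternatively, let curl build it with `-u elastic:123456`. The helper names below are hypothetical.

```python
import base64


def basic_auth_header(user: str, password: str) -> str:
    """Return the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def decode_basic_auth(header_value: str) -> str:
    """Inverse of basic_auth_header: recover 'user:password' from 'Basic <token>'."""
    scheme, _, token = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic auth header")
    return base64.b64decode(token).decode("utf-8")


print(basic_auth_header("elastic", "123456"))  # prints: Basic ZWxhc3RpYzoxMjM0NTY=
```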

The meaning of each field is as follows:

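| Field | Meaning | Notes |
|---|---|---|
| ClientIP | Client IP | Real IP of the user accessing the EdgeOne edge node |
| ClientISP | Client ISP | Carrier of the user's network, e.g. China Telecom, China Unicom, China Mobile |
| ClientRegion | Client region | Country or region where the user is located |
| ClientState | Client province/state | Province or state-level division where the user is located |
| ContentID | Content ID | Unique ID used internally by EO to identify the requested resource |
| EdgeCacheStatus | Cache status | Cache result on the edge node: Hit / Miss / RefreshHit / Bypass |
| EdgeFunctionSubrequest | Subrequest count | Number of internal subrequests triggered by edge functions |
| EdgeInternalTime | Internal processing time | Time the edge node spent processing the request internally (ms) |
| EdgeResponseBodyBytes | Response body size | Bytes of response body returned to the client |
| EdgeResponseBytes | Total response size | Total bytes returned to the client (headers + body) |
| EdgeResponseStatusCode | Response status code | HTTP status code returned by the edge node |
| EdgeResponseTime | Total response time | Total time from the edge node receiving the request to completing the response (ms) |
| EdgeServerID | Edge node ID | Identifier of the EdgeOne node that actually handled the request |
| EdgeServerIP | Edge node IP | IP address of the edge node that actually handled the request |
| ParentRequestID | Parent request ID | ID of the parent request for internal forwarding or subrequests |
| RemotePort | Client port | Port the client used when opening the connection |
| RequestBytes | Request size | Size of the client's request message (bytes) |
| RequestHost | Requested domain | Host domain requested by the client |
| RequestID | Request ID | Unique ID generated by EdgeOne for the request |
| RequestMethod | Request method | HTTP method, e.g. GET, POST |
| RequestProtocol | Request protocol | HTTP protocol version used (HTTP/1.1, HTTP/2, HTTP/3) |
| RequestRange | Range request | Range header of the request, used for segmented or resumable downloads |
| RequestReferer | Referring page | Referer header of the request |
| RequestStatus | Request status | Request processing status as defined by EdgeOne |
| RequestTime | Request time | Time the request reached EdgeOne |
| RequestUA | User-Agent | Client User-Agent string |
| RequestUrl | Request path | URL path of the request (without query parameters) |
| RequestUrlQueryString | Query parameters | Query string of the request URL |
| _import_time | Import time | Time the log entry was imported into Elasticsearch |
| _source_file | Log source | Identifier of the original file or object the log entry came from |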
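The post does not say whether the index was created with an explicit mapping. With Elasticsearch's default dynamic mapping, JSON strings become `text` fields with a `.keyword` sub-field. Exact matches and aggregations on fields like `RequestHost` or `RequestUrl` then have to target the `.keyword` variant. As an alternative, here is a hypothetical explicit mapping for the main fields, to be sent with `PUT /eo_logs` before the first bulk request. All types are assumptions. `ip` rejects values that are not valid IP addresses, and `date` assumes `RequestTime` uses a format Elasticsearch parses by default. Fields that are not listed are still mapped dynamically.

```python
import json

# Hypothetical explicit mapping for PUT /eo_logs. Field types are
# assumptions based on the field table, not taken from the original index.
EO_LOGS_MAPPING = {
    "mappings": {
        "properties": {
            "ClientIP": {"type": "ip"},
            "ClientISP": {"type": "keyword"},
            "ClientRegion": {"type": "keyword"},
            "ClientState": {"type": "keyword"},
            "EdgeCacheStatus": {"type": "keyword"},
            "EdgeInternalTime": {"type": "long"},        # ms
            "EdgeResponseBodyBytes": {"type": "long"},   # bytes
            "EdgeResponseBytes": {"type": "long"},       # bytes
            "EdgeResponseStatusCode": {"type": "integer"},
            "EdgeResponseTime": {"type": "long"},        # ms
            "RequestBytes": {"type": "long"},            # bytes
            "RequestHost": {"type": "keyword"},
            "RequestMethod": {"type": "keyword"},
            "RequestProtocol": {"type": "keyword"},
            "RequestTime": {"type": "date"},
            "RequestUA": {"type": "keyword", "ignore_above": 1024},
            # URLs longer than 2048 chars stay in _source but are not indexed
            "RequestUrl": {"type": "keyword", "ignore_above": 2048},
            "_import_time": {"type": "date"},
            "_source_file": {"type": "keyword"},
        }
    }
}

print(json.dumps(EO_LOGS_MAPPING, indent=2))
```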

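Next, we want to look at the request latency of a specific domain. The relevant field is EdgeResponseTime: the total time from when EdgeOne receives the client's request until the last byte of the response is sent to the client. We can use the following DSL: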

```
POST /eo_logs/_search
```
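Only the request line of this DSL survives. One plausible way to express the query is a `term` filter on the domain plus a `percentiles` aggregation on `EdgeResponseTime`, built here as a Python dict that can be sent as the body of `POST /eo_logs/_search`. The field name `RequestHost` assumes a `keyword` mapping; under dynamic mapping use `RequestHost.keyword`. The domain `www.example.com` is a placeholder. The function name is hypothetical.

```python
import json


def latency_percentiles_query(host: str, host_field: str = "RequestHost") -> dict:
    """Search body: EdgeResponseTime percentiles for a single domain."""
    return {
        "size": 0,  # only the aggregation is needed, not the matching documents
        "query": {"term": {host_field: host}},
        "aggs": {
            "response_time_percentiles": {
                "percentiles": {
                    "field": "EdgeResponseTime",
                    "percents": [50, 90, 95, 99],
                }
            }
        },
    }


print(json.dumps(latency_percentiles_query("www.example.com"), indent=2))
```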

In the response, the part we care about most is the percentiles:

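| Percentile | Value | Interpretation |
|---|---|---|
| p50 | 5 ms | Half of the requests complete within 5 ms (very fast) |
| p90 | 25 ms | 90% of requests complete within 25 ms (healthy) |
| p95 | 74 ms | 95% of requests complete within 74 ms, well under 100 ms (excellent) |
| p99 | 594 ms | The slowest 1% of requests take about 594 ms or longer, i.e. over 0.5 s |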

Overall, requests to this domain are fast, although the slowest 1% take more than half a second.
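To make the table concrete: a p99 of 594 ms means 99% of the sampled requests took at most about 594 ms. The sketch below computes an exact percentile with the nearest-rank method. This is not how Elasticsearch computes it: its `percentiles` aggregation uses the TDigest algorithm and returns approximate values, so results on 30 million documents can differ slightly from an exact calculation. The function name is hypothetical.

```python
import math
from typing import Sequence


def nearest_rank_percentile(values: Sequence[float], p: float) -> float:
    """Exact percentile by the nearest-rank method: the smallest sample such
    that at least p% of all samples are less than or equal to it."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    # Multiply before dividing so integer inputs do not pick up float error.
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]
```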

We can also find out which resources download slowly:

```
POST /eo_logs/_search
```
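Again only the request line survives. One way to express "slowest resources" is a `terms` aggregation on the URL with an `avg` sub-aggregation on `EdgeResponseTime`, with the buckets ordered by that average, slowest first. The sketch makes several assumptions: keyword-mapped `RequestHost` and `RequestUrl` (use the `.keyword` variants under dynamic mapping), a `min_requests` cutoff so that one-off requests do not dominate, and a hypothetical function name. Ordering a `terms` aggregation by a sub-aggregation can be inaccurate across shards; raising `shard_size` reduces that error.

```python
def slow_urls_query(host: str, host_field: str = "RequestHost",
                    url_field: str = "RequestUrl", top_n: int = 20,
                    min_requests: int = 10) -> dict:
    """Search body: URLs of one domain ranked by average EdgeResponseTime."""
    return {
        "size": 0,
        "query": {"term": {host_field: host}},
        "aggs": {
            "by_url": {
                "terms": {
                    "field": url_field,
                    "size": top_n,
                    "min_doc_count": min_requests,  # ignore rarely requested URLs
                    # order buckets by the avg sub-aggregation, slowest first
                    "order": {"avg_response_time": "desc"},
                },
                "aggs": {
                    "avg_response_time": {"avg": {"field": "EdgeResponseTime"}}
                },
            }
        },
    }
```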

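We can then apply targeted optimizations and cache prefetching to the slow URLs found above. However, this DSL is not rigorous. Request time alone is not enough to judge whether a download is slow, because it also depends on the size of the resource. So instead we divide the resource size by the request time, which gives the download speed of the resource, and sort in ascending order to find the resources that are likely to download slowly. The DSL is as follows: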

```
POST /eo_logs/_search
```
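The size-divided-by-time idea can be expressed as a script-based sort. The sketch below is not the author's exact DSL; it rests on these assumptions:

* "Resource size" is `EdgeResponseBodyBytes` and the time is `EdgeResponseTime` in ms, so the result is bytes per millisecond (multiply by 1000 for bytes per second).
* The filters exclude 0 ms requests to avoid division by zero.
* The filters also exclude small responses, whose time is dominated by latency rather than bandwidth. The 100 KB threshold is arbitrary.

A script sort runs for every matching document, so the filters also keep it affordable. `throughput_bytes_per_ms` mirrors the Painless formula for checking results locally. Both function names are hypothetical.

```python
from typing import Optional

# Painless: bytes per millisecond for one document. The query filters below
# guarantee both fields exist and EdgeResponseTime > 0.
THROUGHPUT_SCRIPT = (
    "doc['EdgeResponseBodyBytes'].value / (double) doc['EdgeResponseTime'].value"
)


def throughput_bytes_per_ms(body_bytes: int, time_ms: int) -> Optional[float]:
    """Same formula as THROUGHPUT_SCRIPT; None when the time is not positive."""
    if time_ms <= 0:
        return None
    return body_bytes / time_ms


def slow_download_query(host: str, host_field: str = "RequestHost",
                        min_bytes: int = 100 * 1024, size: int = 20) -> dict:
    """Search body: requests of one domain sorted by throughput, slowest first."""
    return {
        "size": size,
        "_source": ["RequestUrl", "EdgeResponseBodyBytes", "EdgeResponseTime"],
        "query": {
            "bool": {
                "filter": [
                    {"term": {host_field: host}},
                    # small responses are dominated by latency, not bandwidth
                    {"range": {"EdgeResponseBodyBytes": {"gte": min_bytes}}},
                    # avoid division by zero in the script
                    {"range": {"EdgeResponseTime": {"gt": 0}}},
                ]
            }
        },
        "sort": [
            {
                "_script": {
                    "type": "number",
                    "order": "asc",  # lowest throughput (slowest download) first
                    "script": {"lang": "painless", "source": THROUGHPUT_SCRIPT},
                }
            }
        ],
    }
```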

With these results, we can identify the resources whose download speed is likely to be slow, and then optimize the resources behind those URLs specifically.